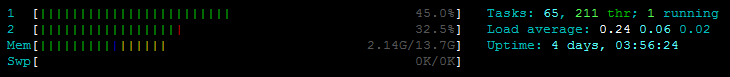

As you've said yourself, you can hit H to show user threads.

Just for future reference (and for fun), let's calculate CPU utilisation!

In a modern operating system, there's a scheduler. It aims to ensure that all of the processes and their threads get a fair share of computing time. I won't go into scheduling too much (it's really complicated). But in the end there is something called a run queue. This is where all the instructions of all of the processes line up to wait for their turn to be executed.

Any process puts its "tasks" on the run queue, and once the processor's ready, it pops them off and executes them. When a program goes to sleep, for example, it removes itself from the run queue and returns to the "end of the line" once it's ready to run again.

Sorting on this queue has to do with the processes' priority (also called "nice value", i.e. a process is nice about system resources).

The length of the queue determines the load of the system. A load of 2.5, for example, means that there are 2.5 instructions for every instruction the CPU can deal with in real time.

On Linux, by the way, this load is calculated in 10ms intervals (by default).

Now onto the percentage values of CPU utilisation:

Imagine you have two clocks. One is called t and it represents real time. The other is a processing time clock: it only runs if there is processing to do. That means, only when a process calculates something does the clock run. Each and every process on the system 'has got' one of those.

The processor utilisation can now be calculated for a single process: divide the time that passed on its processing time clock by the time that passed on t over the same interval.

On a multi-core machine, this may result in a value of 3.9 of course, because the CPU can calculate four seconds' worth of computation every second, if utilised perfectly.

Here's a small Python snippet that does this:

> import time
> # the next line will take a while to compute

In a perfect world, you could infer from a measured utilisation of 66.93% that system load is 100 - 66.93 ≈ 33.1%. (But in reality that'd be wrong because of complex things like I/O wait, scheduling inefficiencies and so on.)

In contrast to load, these calculations will always result in a value between 0 and the number of processors. There is now no way to differentiate between a machine that is running three tasks, utilising 100% CPU, and a machine running a million tasks, getting barely any work done on any single one of them, also at 100%. If you're, for instance, trying to balance a bunch of processes on many computers, CPU utilisation is next to useless.

Now in reality, there is more than one of those processing time clocks. There's one for waiting on I/O, for example. So you could also calculate I/O resource utilisation.
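If you want to try the two-clock idea yourself, here is a minimal sketch in Python. It is my own illustration, not a reconstruction of the snippet quoted in this answer. It assumes that `time.perf_counter()` is a fair stand-in for the real-time clock t and that `time.process_time()` is the processing time clock of the current process (called `c` here, a name I picked). The helper names `cpu_utilisation`, `busy` and `idle` are made up for the example.

```python
import time


def cpu_utilisation(work):
    """Run work() and return (processing seconds, real seconds, utilisation)."""
    t_start = time.perf_counter()   # stand-in for the real-time clock t
    c_start = time.process_time()   # processing time clock of this process
    work()
    c = time.process_time() - c_start
    t = time.perf_counter() - t_start
    return c, t, c / t


def busy():
    # Pure computation: the processing time clock runs almost the whole time.
    sum(i * i for i in range(2_000_000))


def idle():
    # Sleeping: real time passes, but the processing time clock barely moves.
    time.sleep(0.5)


if __name__ == "__main__":
    for name, work in (("busy", busy), ("idle", idle)):
        c, t, u = cpu_utilisation(work)
        print(f"{name}: c = {c:.3f}s, t = {t:.3f}s, utilisation = {u:.1%}")
```

On an otherwise quiet machine the busy case should land close to 100% and the idle case close to 0%. The exact numbers depend on what else the scheduler has to fit in, which is why a single percentage says little about how long the run queue is.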
